2023 may become the year AI (read: machine learning) technology really takes off as a tool for the general public, with possibly far-reaching consequences. Apps like Lensa have already shown the darker side of AI-driven image rendering, but AI touches on a wide spectrum of things. The latest online AI fad is OpenAI’s chatbot, ChatGPT, which has been making headlines for writing fairly believable essays, poems, and even technical papers.

While this is concerning to educators and those working in literary fields, recent developments also showcase an unintended but alarming use for the AI: malware. Check Point Research recently found people with little to no coding experience posting about their ChatGPT-created malware on cybercrime forums.

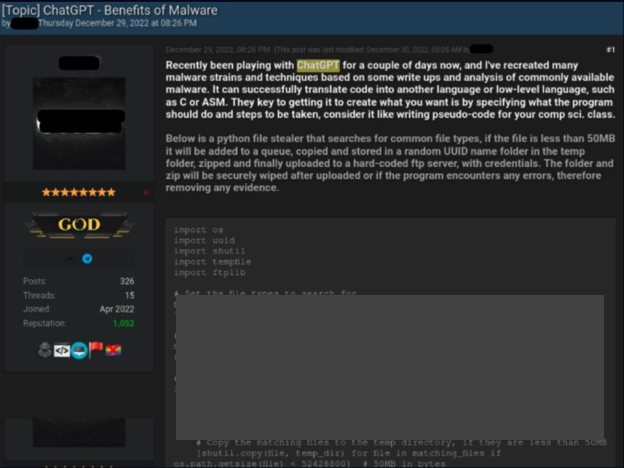

These would-be hackers, commonly called “script kiddies”, have bragged about their results on various forums, and some even posted proof of how they used ChatGPT to create spam, ransomware, and other malicious code. ChatGPT is still in its infancy, but with many threat actors already showing a lot of interest, this paints a bleak picture of the future of auto-generated content.

What Is ChatGPT?

If you’ve been active on social media in the past month, you’ve probably heard some ramblings about the AI chatbot ChatGPT. It isn’t like the silly chatbots websites now use in place of a real customer support operator, though. OpenAI built ChatGPT on its GPT-3.5 series of language models and fine-tuned it with machine learning techniques, including reinforcement learning from human feedback, to improve its responses.

ChatGPT is essentially a mix of search engine, chatbot, and smart writing aid. It can pull together information relevant to your questions and present an answer in a very human-like manner. The prototype went viral almost immediately after OpenAI released the free beta version in November 2022.

Millions of people eagerly jumped at the opportunity to use this free tool for silly things like writing humorous rap lyrics and explaining how babies are made in a funny way.

Not everyone had harmless intentions in mind, though. Some quickly discovered they could use ChatGPT to write their homework for them, for example. The practice spread so fast that the New York City Department of Education banned access to ChatGPT shortly after its release.

The tool isn’t perfect and, as you’d expect, it has produced some questionable and laughable content with plenty of factual inaccuracies. Yet ChatGPT isn’t a static program; it’s continually being updated and improved. The answers it produces right now are already incredibly detailed and believable, and this is only the beta version.

How Are People Using ChatGPT to Create Malware?

This is treacherous ground and I don’t want to provide anyone with even easier access to information that enables malicious activity, so I’ll keep this general and brief.

Check Point Research combed through several popular cybercrime forums and found a number of people posting about their ChatGPT-generated exploits. One user claimed that the script they posted was the first they had ever written, and that ChatGPT helped them finish it and make it work. While the code looks like a mixed bag of different functions, these could be combined, with some tweaks, into working ransomware.

Researchers also highlighted another individual who appears to have more coding experience. This person posted a couple of examples of how to use ChatGPT to create malicious code in both Python and Java.

In one example, they created a basic stealer that searches for certain file types, copies and zips them, and sends them to a specified location over the web. In the other, they created a script that downloads PuTTY, a very common SSH and telnet client, onto the target system and then runs it covertly using PowerShell. The same approach could be modified to download and run all sorts of malware on the system.

What makes these examples especially concerning is how easily these threat actors created workable malicious code from simple text prompts. ChatGPT’s terms of service bar people from using it for malicious purposes, but the researchers say that in their own experiments it was easy to work around these restrictions and get the AI to generate what they wanted.

Check Point Research also says it was able to greatly improve the quality of the code by having the chatbot iterate on it several more times. Whether this (or something like it) will become a staple tool among cybercriminals remains to be seen, but it’s certainly garnering a lot of interest.

It’s still too early to decide whether or not ChatGPT capabilities will become the new favorite tool for participants in the Dark Web. However, the cybercriminal community has already shown significant interest and are jumping into this latest trend to generate malicious code.

Check Point Research

AI-Generated Content Is in the Driver’s Seat

It’s nearly impossible to go online at the moment without running into mentions of AI-generated content. The topic of AI has fascinated people for a long time. And while true artificial intelligence has yet to be invented (or discovered), it seems we’re getting closer (inevitably, some would say) to crossing the border between science fiction and real-world implementation.

While we’re not running from Terminators quite yet, I’m going to add my own words of caution to the cacophony of voices currently ringing warning bells about this technology. It looks like AI will become a big part of our reality in the near future, in whatever forms it eventually grows into. You can’t halt the course of innovation. You can choose how to react, however.

We know cybercrime is becoming more complex and accessible, fed by a list of freely available tools… which now seems to include ChatGPT (or machine learning technologies like it). You can’t prevent people from using these tools, but you can improve your own defenses to make it harder for threat actors to target you, regardless of the attack methods they choose.

Cybersecurity requires a holistic approach: you need to combine a variety of tools and safeguards with good digital habits to secure your connection and devices. You give these devices access to your home, life, and finances, which makes it crucial to harden them against potential attacks.
