It isn’t just Google that’s been scrambling to keep up after the public beta release of ChatGPT in November 2022. Governments around the world have also been working through scenarios on how to deal with the sudden growth of new technologies. Both the US Department of Homeland Security (DHS) and European Data Protection Board (EDPB) have now set up AI task forces to deal with the implications of AI — albeit in different ways.

ChatGPT and other generative AI tools have caused broad debate among the public and private sectors about how artificial intelligence technology should be regulated and used. ChatGPT has seen overwhelming adoption in the last few months, reaching 100 million active users in January 2023 according to Similarweb, and its impact is far-reaching.

Many are questioning how AI will change the way we deal with privacy, cybersecurity threats, the spread of false information, education, and work. The US DHS and the EDPB seem to be approaching this puzzle from different angles, though. While the EDPB is cautious and wants to explore ways to restrict AI tools like ChatGPT, the DHS seems very interested in exploring how to use the technology to its benefit.

The US Adopts AI As Part of Its Defence Arsenal

US DHS Secretary Alejandro Mayorkas announced the creation of an artificial intelligence task force at the State of Homeland Security address for the Council on Foreign Relations in Washington DC last week.

The security agency plans to utilize AI in various ways. This includes protecting critical infrastructure like water supply plants and electrical grids from state-sponsored hacking groups. This isn’t surprising, given the Colonial Pipeline ransomware attack and the Oldsmar water treatment plant attack, both in 2021. Schools and hospitals are also extremely popular targets for cybercriminals.

The agency also plans to use this technology to screen cargo to “enhance the integrity of the nation’s supply chains.” According to the DHS, AI can help with identifying goods made using forced labor and with reducing the flow of drugs.

Apparently, the agency plans to leverage AI to detect drugs like fentanyl as well as to track the chemicals used to make the drug “around the world.” On top of that, the agency wants to use AI to “disrupt key points of drug operations belonging to the criminal supply chains.”

Interestingly, threats originating from China were one of the other key talking points at this DHS address. Among other things, the address mentioned defending critical infrastructure against China and protecting against “PRC malign economic influence.” This seems to align with the US’s apparent stance on foreign technology, which Congress is seeking to limit through its RESTRICT Act.

At the same time, the US government is showing a clear interest in using technology to its own advantage, so long as it’s based locally, apparently. Congress recently bought 40 ChatGPT Plus licenses to start experimenting with generative AI. While it’s keeping the names of the agencies it distributed these licenses to a secret, it’s very possible the DHS is one of them.

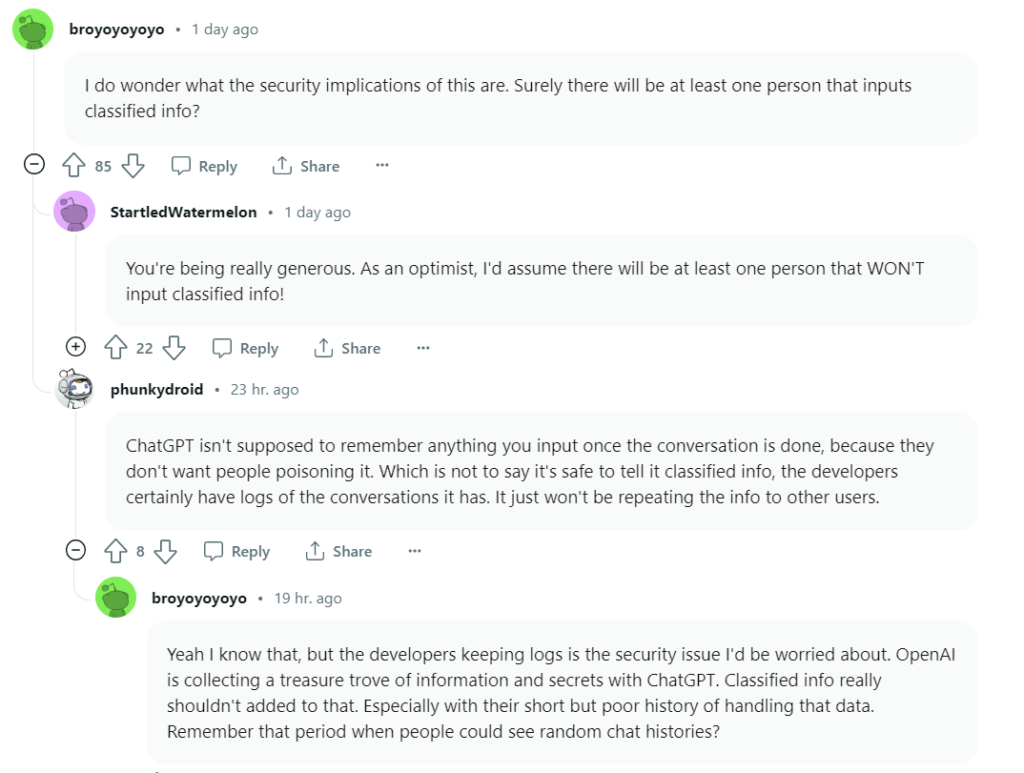

Source: Reddit

The EU Takes a More Cautious Approach, At Least Officially

OpenAI’s ChatGPT has caught the attention of EU countries, but not in a good way. Italy’s data protection agency, Garante, blocked ChatGPT and the tool has been offline in the country since the end of March. The agency gave OpenAI until the end of April to meet a list of privacy-related demands in order to resume its services in the country.

Apparently, Germany’s commissioner for data protection is considering similar steps. After the EDPB meeting which discussed ChatGPT and similar technologies on Thursday, Spain’s AEPD watchdog also said it’s launching an investigation into the tool. According to the AEPD, this preliminary investigation will focus on potential data breaches by ChatGPT.

The EDPB is an independent body composed of several national data watchdogs that oversees data protection rules in the European Union. The EDPB also decided to launch a dedicated AI task force last week aimed at exploring possible enforcement actions conducted by data protection authorities.

According to a source quoted by Reuters, last week’s EDPB meeting, at which the task force was announced, included policy experts who presented opinions and ideas. According to Reuters, the member states will aim to align their policy positions, but this will take time.

EU lawmakers are also discussing the introduction of an Artificial Intelligence Act, which would regulate every product and service using AI, and classify different AI tools according to their perceived level of risk. Even though most of the AI news coming from EU-based agencies takes a preventative tone right now, it’s very likely EU governments will end up using this technology in similar ways to the US.

AI Is Already Changing The Online Landscape

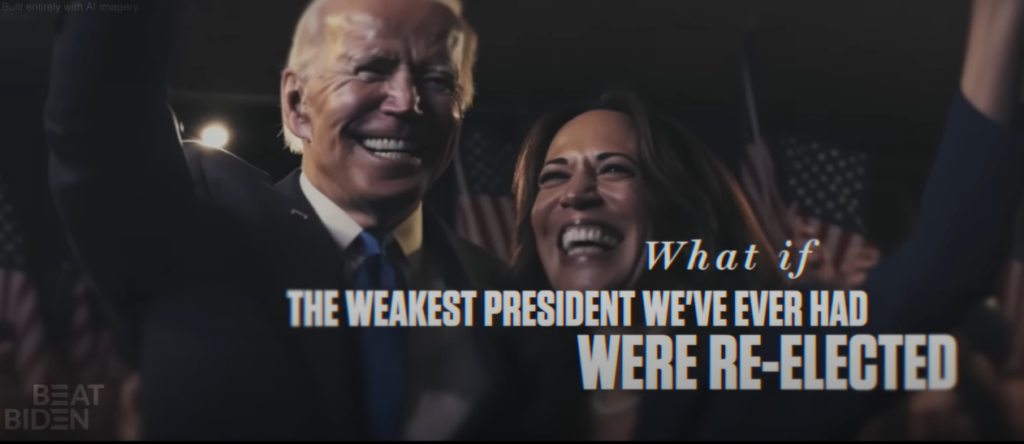

We’re already seeing how AI can potentially impact society going forward. Students are using ChatGPT to cheat on homework, the media is testing AI-generated blog posts, and people with almost no hacking experience are using generative AI tools to create malicious code. Even US Republicans have joined in with an AI-created ad aimed at showing people what the future would look like if Biden is re-elected.

Alongside AI, one of the next big hurdles in cybersecurity is the prospect of attacks launched by quantum computers. The answer is post-quantum cryptography: cryptographic systems that run on classical computers but are designed to withstand these attacks. We’re not quite there yet, but this reality isn’t far off.

“We must address the many ways in which artificial intelligence will drastically alter the threat landscape and augment the arsenal of tools we possess to succeed in the face of these threats,” DHS Secretary Mayorkas said.

Some speculate we’ve already reached the point of technological singularity — a hypothetical point in time where technological growth becomes uncontrollable and irreversible. The idea is this leads to unforeseeable changes and potentially increased threats as the technology continues to develop. Given the direction things are going, this doesn’t sound impossible.

At the end of the day, AI works with data, including all the data you willingly (and unwillingly) share on the web. This includes all of the information you share every day through your browser, websites, accounts, and apps. While you can’t control what type of technology companies invent, you can limit the amount of information they gather about you. In the long run, this will help curb the ways in which criminals and third parties can target you.

Use CyberGhost VPN to secure your connection and prevent third parties from following your every move on the web. Tools can’t do all the work for you, though. You’ll have to improve your digital hygiene and security, and limit the amount of information you share online to improve your privacy.
